When it comes to crime and punishment, how judges dish out prison sentences is anything but a game.

But students from the University of Utah have created a new mobile game for iPhone and Android devices that demonstrates how the software algorithms many of the nation’s courts use to evaluate defendants could be as biased as humans.

The students, part of a university honors class this semester called When Machines Decide: The Promise and Peril of Living in a Data-Driven Society, were tasked with creating a mobile app that teaches the public how a machine-learning algorithm could develop certain prejudices.

“It was created to show that when you start using algorithms for this purpose there are unintended and surprising consequences,” says Suresh Venkatasubramanian, an associate professor in the U’s School of Computing who helped the students develop the app. He taught the class with Randy Dryer, who teaches honors and law courses at the U. “The algorithm can perceive patterns in human decision-making that are either deeply buried within ourselves or are just false.”

When setting bail or deciding sentences, many courts use software built on sophisticated algorithms to help assess a defendant’s risk of fleeing or of committing another crime, much as many companies use software algorithms to narrow the pool of job applicants. But Venkatasubramanian’s research into machine learning suggests these kinds of algorithms could be flawed.

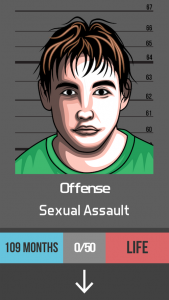

Playing Justice.exe is simple: The game shows the player a criminal defendant’s mugshot, his or her offense and the minimum and maximum sentences for that offense. It also lists additional information about the defendant, including education level, number of prior offenses, marital status and race.

The player’s job is to decide whether each defendant should get the minimum or maximum sentence, working through 50 defendants in all. As the game goes on, the app begins withholding certain pieces of information, such as the defendant’s race, so the player must decide on a sentence with fewer facts to go on. Meanwhile, the app keeps adjusting its own model to try to predict how the player will sentence future defendants.

“What you’re doing is creating the data that the algorithm is using to build the predictor,” Venkatasubramanian says about how the game works. “The player is generating the data by their decisions that is then put into a learner that generates a model. This is how every single machine-learning algorithm works.”
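The loop Venkatasubramanian describes (player decisions become training data, a learner turns that data into a model, and the model predicts future choices) can be sketched in a few lines. This release does not say which learner Justice.exe uses, so the sketch below swaps in plain logistic regression as a stand-in. The feature encoding, the function names `train_logistic` and `predicts_max` and the toy decisions are all hypothetical.

```python
import math

# Hypothetical sketch, not the Justice.exe implementation.
# Assumed encoding: each defendant is a tuple
# (prior_offenses, has_degree, is_married, group_flag); the label is 1 if
# the player chose the maximum sentence and 0 if they chose the minimum.


def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit logistic-regression weights and bias by batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            err = 1.0 / (1.0 + math.exp(-z)) - y  # predicted probability minus label
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        # Average the gradient over all decisions, then take one step.
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias


def predicts_max(model, x):
    """Return True if the learned model expects the player to give the maximum."""
    weights, bias = model
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return z >= 0.0  # same as predicted probability >= 0.5


# Made-up decisions standing in for one player's choices.
rows = [(0, 1, 1, 0), (3, 0, 0, 1), (1, 1, 0, 0), (4, 0, 1, 1), (2, 0, 0, 0), (5, 1, 0, 1)]
labels = [0, 1, 0, 1, 0, 1]
model = train_logistic(rows, labels)
print(predicts_max(model, (4, 0, 0, 1)))  # guess for a defendant the player hasn't seen
```

The player never states a rule; the model only sees which boxes were checked and which sentence followed, which is why it can latch onto a pattern the player did not consciously intend.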

At the end of the game, the app tries to determine how the player sentenced defendants based on race, type of offense and criminal history. The point is to show players that the way they intended to mete out punishment may not be the way the algorithm perceived it.
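This release does not describe how the app builds its end-of-game summary. One simple way to surface a pattern a player may not have meant to create is to compare how often the player chose the maximum sentence for each value of an attribute, then rank attributes by the size of that gap. The sketch below assumes that approach; `max_rate_by`, `rank_attributes`, the group labels "A" and "B" and the decisions themselves are hypothetical.

```python
from collections import defaultdict

# Hypothetical sketch, not the Justice.exe implementation: compare
# maximum-sentence rates across the values of each attribute.


def max_rate_by(decisions, attribute):
    """Share of maximum sentences, split by the values of one attribute."""
    counts = defaultdict(lambda: [0, 0])  # value -> [max_count, total]
    for defendant, gave_max in decisions:
        bucket = counts[defendant[attribute]]
        bucket[0] += int(gave_max)
        bucket[1] += 1
    return {value: n_max / total for value, (n_max, total) in counts.items()}


def rank_attributes(decisions, attributes):
    """Order attributes by the gap between their highest and lowest max-sentence rates."""
    gaps = {}
    for attribute in attributes:
        rates = max_rate_by(decisions, attribute).values()
        gaps[attribute] = max(rates) - min(rates)
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Made-up decisions: (defendant attributes, player chose the maximum?)
decisions = [
    ({"race": "A", "offense": "theft", "priors": "none"}, False),
    ({"race": "B", "offense": "theft", "priors": "none"}, True),
    ({"race": "A", "offense": "assault", "priors": "some"}, True),
    ({"race": "B", "offense": "assault", "priors": "some"}, True),
    ({"race": "A", "offense": "theft", "priors": "some"}, False),
    ({"race": "B", "offense": "theft", "priors": "none"}, True),
]
print(rank_attributes(decisions, ["race", "offense", "priors"]))
```

In this toy data, a player who believed they were sentencing on offense and record would see race ranked first. A large gap flags a pattern worth questioning rather than proving intent, and six decisions are far too few to say much.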

“Algorithms are everywhere, silently operating in the background and making decisions that humans used to make,” Dryer says. “The machine does not necessarily make better or more fair decisions, and the game was designed to illustrate that fact.”

The honors class, made up of nine students from fields including bioengineering, computing, nursing and business, also gave a presentation to the Utah Sentencing Commission earlier this month to demonstrate how algorithms can be biased, and offered recommendations on how to approach the problem.

“There are things you should be asking and things you should be doing as policy makers. For the public, you need to know what kinds of questions you should be asking of yourself and of your elected representatives if they choose to use this,” Venkatasubramanian says. “The problem is there aren’t good answers to these questions, but this is about being aware of these issues.”

This news release and photos may be downloaded from unews.utah.edu.